Is robots.txt the straw that’s breaking your search engine optimisation camel’s again?

Search engine optimization (search engine optimisation) contains large and small web site adjustments. The robots.txt file might look like a minor, technical search engine optimisation factor, however it might significantly affect your website’s visibility and rankings.

With robots.txt defined, you possibly can see the significance of this file to your website’s performance and construction. Keep studying to search out out robots.txt greatest practices for enhancing your rankings within the search engine outcomes web page (SERP).

Want efficient full-service search engine optimisation methods from a number one company? Mumbai Web has strong companies and a workforce of 150+ including experience to your marketing campaign. Contact us on-line or name us at +91 8830660161 now.

What is a robots.txt file?

A robots.txt file is a directive that tells search engine robots or crawlers the way to proceed by means of a website. In the crawling and indexing processes, directives act as orders to information search engine bots, like Googlebot, to the appropriate pages.

Robots.txt information are additionally categorized as plain textual content information, and so they dwell within the root listing of websites. If your area is “www.robotsrock.com,” the robots.txt is at “www.robotsrock.com/robots.txt.”

Robots.txt have two major capabilities — they’ll both permit or disallow (block) bots. However, the robots.txt file isn’t the identical as noindex meta directives, which preserve pages from getting listed.

Robots.txt are extra like ideas moderately than unbreakable guidelines for bots — and your pages can nonetheless find yourself listed and within the search outcomes for choose key phrases. Mainly, the information management the pressure in your server and handle the frequency and depth of crawling.

The file designates user-agents, which both apply to a selected search engine bot or prolong the order to all bots. For instance, if you’d like simply Google to persistently crawl pages as an alternative of Bing, you possibly can ship them a directive because the user-agent.

Website builders or homeowners can stop bots from crawling sure pages or sections of a website with robots.txt.

Why use robots.txt information?

You need Google and its customers to simply discover pages in your web site — that’s the entire level of search engine optimisation, proper? Well, that’s not essentially true. You need Google and its customers to effortlessly find the proper pages in your website.

Like most websites, you most likely have thanks pages that comply with conversions or transactions. Do thanks pages qualify as the best selections to rank and obtain common crawling? It’s unlikely.

Constant crawling of nonessential pages can decelerate your server and current different issues that hinder your search engine optimisation efforts. Robots.txt is the answer to moderating what bots crawl and when.

One of the explanations robots.txt information assist search engine optimisation is to course of new optimisation actions. Their crawling check-ins register once you change your header tags, meta descriptions, and key phrase utilization — and efficient search engine crawlers rank your website in keeping with constructive developments as quickly as attainable.

As you implement your search engine optimization technique or publish new content material, you need serps to acknowledge the modifications you’re making and the outcomes to replicate these adjustments. If you’ve a gradual website crawling price, the proof of your improved website can lag.

Robots.txt could make your website tidy and environment friendly, though they don’t immediately push your web page increased within the SERPs. They not directly optimize your website, so it doesn’t incur penalties, sap your crawl price range, gradual your server, and plug the mistaken pages filled with hyperlink juice.

Four methods robots.txt information enhance search engine optimisation

While utilizing robots.txt information doesn’t assure prime rankings, it does matter for search engine optimisation. They’re an integral technical search engine optimisation part that lets your website run easily and satisfies guests.

search engine optimisation goals to quickly load your web page for customers, ship authentic content material, and increase your extremely related pages. Robots.txt performs a task in making your website accessible and helpful.

Here are 4 methods you possibly can enhance search engine optimisation with robots.txt information.

1. Preserve your crawl price range

Search engine bot crawling is effective, however crawling can overwhelm websites that don’t have the muscle to deal with visits from bots and customers.

Googlebot units apart a budgeted portion for every website that matches their desirability and nature. Some websites are bigger, others maintain immense authority, so that they get an even bigger allowance from Googlebot.

Google doesn’t clearly outline the crawl price range, however they do say the target is to prioritize what to crawl, when to crawl, and the way rigorously to crawl it.

Essentially, the “crawl price range” is the allotted variety of pages that Googlebot crawls and indexes on a website inside a sure period of time.

The crawl price range has two driving components:

- Crawl price restrict places a restriction on the crawling habits of the search engine, so it doesn’t overload your server.

- Crawl demand, reputation, and freshness decide whether or not the positioning wants roughly crawling.

Since you don’t have a limiteless provide of crawling, you possibly can set up robots.txt to avert Googlebot from further pages and level them to the numerous ones. This eliminates waste out of your crawl price range, and it saves each you and Google from worrying about irrelevant pages.

2. Prevent duplicate content material footprints

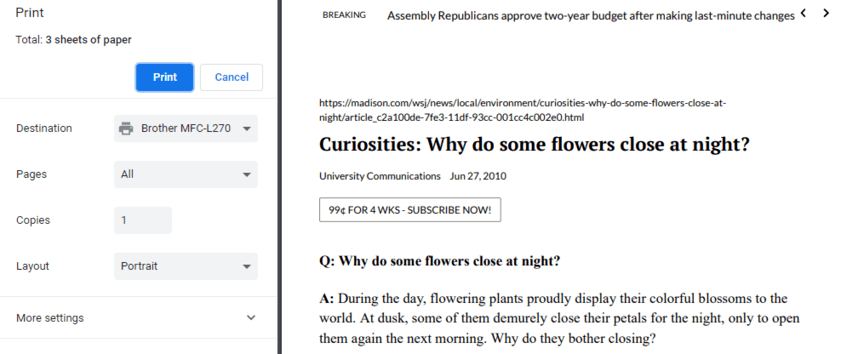

Search engines are inclined to frown on duplicate content material. Although they particularly don’t need manipulative duplicate content. Duplicate content material like PDF or printer-friendly variations of your pages doesn’t penalize your website.

However, you don’t want bots to crawl duplicate content material pages and show them within the SERPs. Robots.txt is one choice for minimizing your accessible duplicate content material for crawling.

There are different strategies for informing Google about duplicate content material like canonicalization — which is Google’s suggestion — however you possibly can rope off duplicate content material with robots.txt information to preserve your crawl price range, too.

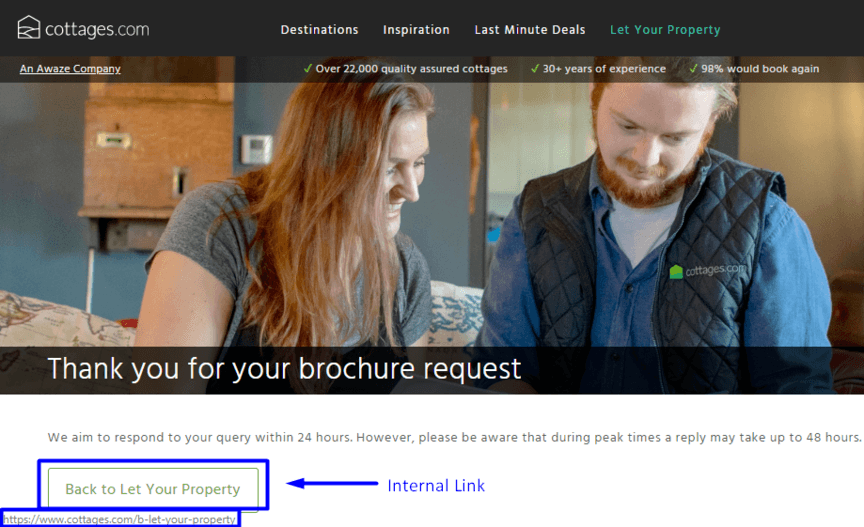

3. Pass hyperlink fairness to the appropriate pages

Equity from internal linking is a particular device to extend your search engine optimization. Your best-performing pages can bump up the credibility of your poor and common pages in Google’s eyes.

However, robots.txt information inform bots to take a hike as soon as they’ve reached a web page with the directive. That means they don’t comply with the linked pathways or attribute the rating energy from these pages in the event that they obey your order.

Your hyperlink juice is highly effective, and once you use robots.txt accurately, the hyperlink fairness passes to the pages you truly wish to elevate moderately than those who ought to stay within the background. Only use robots.txt information for pages that don’t want fairness from their on-page hyperlinks.

4. Designate crawling directions for chosen bots

Even throughout the identical search engine, there are a number of bots. Google has crawlers apart from the main “Googlebot”, together with Googlebot Images, Googlebot Videos, AdsBot, and extra.

You can direct crawlers away from information that you just don’t wish to seem in searches with robots.txt. For occasion, if you wish to block information from displaying up in Google Images searches, you possibly can put disallow directives in your picture information.

In private directories, this may deter search engine bots, however keep in mind that this doesn’t defend delicate and personal info although.

Partner with Mumbai Web to profit from your robots.txt

Robots.txt greatest practices can add to your search engine optimisation technique and assist search engine bots navigate your website. With technical search engine optimisation strategies like these, you possibly can hone your web site to work at its greatest and safe prime rankings in search outcomes.

Mumbai Web is a prime SEO company with a workforce of 150+ professionals bringing experience to your marketing campaign. Our search engine optimisation companies are centered on driving outcomes, and with over 4.6 million leads generated within the final 5 years, it’s clear we comply with by means of.

Interested in getting the very best high quality search engine optimisation companies for your online business? Contact us online or name us at +91 8830660161 now to talk with a professional workforce member.